Sensor

Sensor-cleaning mode on this DSLR reveals the CMOS imaging chip at its heart (image by Voxphoto)

The word "sensor" has many meanings in different branches of technology. In the context of a digital camera, however, it refers to the semiconductor device placed at the camera's focal plane, i.e. where earlier cameras held the film.

A camera sensor is divided into millions of microscopic light-sensitive cells, or pixels. These absorb light and build up electrical charge in proportion to the intensity of the illumination. The charge levels are digitized and stored, and the resulting numerical value for each pixel records how light or dark the photographed scene was at that point. Together, these values make up a digital photo.
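The signal chain described above can be sketched in a few lines of Python. This is a simplified model under illustrative assumptions: the quantum efficiency (the fraction of photons converted to charge), the full-well capacity (how much charge a pixel holds before saturating) and the 12-bit converter are hypothetical values, and real sensors add noise, gain and black-level offsets that are ignored here.

```python
def digitize_pixel(photons, quantum_efficiency=0.5, full_well=20000, bit_depth=12):
    """Convert a pixel's photon count into a raw digital number (DN).

    Illustrative assumptions: 50% quantum efficiency, a 20,000-electron
    full well and a 12-bit analog-to-digital converter. Noise, gain and
    black-level offsets are ignored.
    """
    electrons = photons * quantum_efficiency       # charge proportional to the light absorbed
    electrons = min(electrons, full_well)          # a full pixel saturates (clips)
    max_dn = 2 ** bit_depth - 1                    # 4095 for a 12-bit converter
    return round(electrons / full_well * max_dn)   # linear quantization to an integer


# Photon counts for four pixels across a bright-to-dark gradient
scene = [40000, 10000, 1000, 0]
raw = [digitize_pixel(p) for p in scene]
print(raw)  # [4095, 1024, 102, 0]
```

In this model the output stays proportional to the light until the pixel's well fills; anything brighter simply clips to the maximum value, which is why overexposed highlights lose all detail.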

Technologically, the semiconductor may be a CCD (the digital sensor technology that evolved first) or a CMOS design. Each has specific advantages, but CMOS lends itself better to fast read-out rates and is becoming increasingly dominant as cameras add high-definition video to their feature lists.

By sales volume, by far the largest consumers of sensor chips are cell-phone handset manufacturers, a market with ruthless competition to shrink entire camera subsystems to the size of a lentil. In contrast, "DSC" (digital still camera) sensors are seen as something of a higher-priced specialty product.


Color

A plain CCD or CMOS sensor measures only the intensity of light, not its color, so on its own it produces a greyscale image. Further developments were needed for sensors to see in color. Some current video cameras use more than one CCD, as did a few early stills cameras (e.g. the Minolta RD-175), with a color-separation prism or filters directing different colors of light to the individual sensors. This is analogous to the filter-separation technique used a century ago to create color images on black-and-white film.

But the color technology used in almost all digital camera sensors today was developed in 1975 at Kodak by Dr. Bryce Bayer, whose idea was to place a separate color filter over each light-sensitive pixel of the chip. His pattern, the Bayer filter, repeats a four-pixel tile containing two green-filtered pixels, one red and one blue, and is nearly universal today. Green gets twice as many pixels because the eye is most sensitive to green light, which carries most of the perceived brightness detail.
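As a minimal sketch, the hypothetical helper below (not part of any camera API) reports which filter color sits over a given pixel, assuming the common RGGB arrangement of the 2x2 tile. Printing a 4x4 corner shows the repeating pattern, with green on every other pixel.

```python
def bayer_color(row, col):
    """Return the filter color ("R", "G" or "B") over pixel (row, col).

    Assumes an RGGB tile: red at the top-left, blue at the bottom-right and
    green on the other two sites. Real sensors may use another phase of the
    same pattern (e.g. BGGR or GRBG).
    """
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


# Print the top-left 4x4 corner of the mosaic
for r in range(4):
    print(" ".join(bayer_color(r, c) for c in range(4)))
# R G R G
# G B G B
# R G R G
# G B G B
```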

This color filter array (sometimes abbreviated CFA) means that the raw sensor image cannot be used directly. Instead, image processing is needed to "demosaic" it, i.e. to estimate the two missing color values at each pixel by interpolating from nearby pixels that recorded those colors. This costs some resolution, and can produce unwanted "maze" artifacts or rainbow-colored fringes in fine detail. Nonetheless, current demosaicing algorithms can recover most of the luminance resolution of the sensor.
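The simplest demosaicing method is bilinear interpolation: for each color a pixel did not record, average the nearest pixels that did. The sketch below applies it to a small RGGB mosaic stored as a list of lists. It is illustrative only, since the algorithms in cameras and raw converters are edge-aware and considerably more elaborate. The helper names are hypothetical, and the image is assumed to be at least 2x2 pixels so that every color appears in each 3x3 neighborhood.

```python
def bayer_color(row, col):
    """Filter color over pixel (row, col), assuming an RGGB Bayer tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic_bilinear(raw):
    """Bilinear demosaic of an RGGB mosaic (list of rows of numbers).

    Returns rows of (R, G, B) tuples. A pixel keeps its own measured value
    for the color it recorded; each missing color is the average of the
    same-colored pixels in its 3x3 neighborhood (clipped at the borders).
    Assumes the image is at least 2x2 pixels.
    """
    h, w = len(raw), len(raw[0])
    rgb = []
    for r in range(h):
        out_row = []
        for c in range(w):
            pixel = {}
            for ch in "RGB":
                if bayer_color(r, c) == ch:
                    pixel[ch] = float(raw[r][c])  # measured directly
                    continue
                neighbors = [
                    raw[rr][cc]
                    for rr in range(max(r - 1, 0), min(r + 2, h))
                    for cc in range(max(c - 1, 0), min(c + 2, w))
                    if bayer_color(rr, cc) == ch
                ]
                pixel[ch] = sum(neighbors) / len(neighbors)  # interpolated
            out_row.append((pixel["R"], pixel["G"], pixel["B"]))
        rgb.append(out_row)
    return rgb


# A uniformly colored patch (R=200, G=100, B=50) as the sensor would record it
flat = {"R": 200, "G": 100, "B": 50}
raw = [[flat[bayer_color(r, c)] for c in range(4)] for r in range(4)]
print(demosaic_bilinear(raw)[1][2])  # (200.0, 100.0, 50.0)
```

On a flat patch of color, interpolation recovers the original values exactly. Across a sharp edge, though, the averaged neighbors straddle the edge, which is where the color fringes and maze patterns mentioned above come from.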

Some alternative sensor designs exist. Fujifilm's SuperCCD arranges its photosites in a honeycomb pattern to increase light sensitivity. Foveon's X3 sensors, a CMOS design, produce color through an alternative process: instead of placing red, green and blue photosites side by side, they stack them on top of each other at each pixel location, relying on the fact that silicon absorbs different wavelengths at different depths. This produces output with a lower total pixel count but higher per-pixel resolution than a standard color-filter sensor. Overall color quality is high, but noise has been a persistent issue.

Kodak has also begun marketing CCDs with a filter array that includes some "clear" (unfiltered) pixels[1], to regain some of the sensitivity otherwise lost to light absorption in the filters.

Noise

A photographic sensor is in effect a photon counter. At microscopic scales the apparent smoothness of light breaks down into a random process of individual photons arriving, like drops of rain. Even under perfectly uniform illumination, adjacent pixels will intercept different numbers of photons. This is known as "shot noise" (by analogy to buckshot) and is typically the dominant source of image noise in digital photography. As the pixel size shrinks, each pixel collects fewer photons, and the pixel-to-pixel variation grows relative to the signal. For a sensor of a certain desired resolution, say 12 megapixels, the only way to decrease shot noise at a given exposure is by making the entire chip larger, thus increasing each pixel's light-gathering area.
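Photon counts follow Poisson statistics, so a pixel that collects N photons on average fluctuates by about √N, giving a signal-to-noise ratio (SNR) of N/√N = √N. A short simulation can illustrate this. The sketch below draws Poisson-distributed photon counts for many identically lit pixels and measures their SNR (mean divided by standard deviation). It assumes a small pixel that collects 25 photons on average and a pixel with four times the area (twice the width) that collects 100 under the same light; these numbers are purely illustrative, and the sampler used (Knuth's method) is only suitable for modest photon counts like these.

```python
import math
import random
import statistics


def poisson(mean, rng):
    """Draw a Poisson-distributed count using Knuth's multiplication method.

    Suitable for modest means only: exp(-mean) underflows for very large ones.
    """
    limit = math.exp(-mean)
    count, product = 0, 1.0
    while True:
        product *= rng.random()
        if product <= limit:
            return count
        count += 1


def pixel_snr(mean_photons, trials=5000, seed=1):
    """Simulate many identically lit pixels; return mean / standard deviation."""
    rng = random.Random(seed)
    counts = [poisson(mean_photons, rng) for _ in range(trials)]
    return statistics.mean(counts) / statistics.pstdev(counts)


# Same light level: the larger pixel has 4x the area, so 4x the photons
small = pixel_snr(25)    # theory: sqrt(25)  = 5
large = pixel_snr(100)   # theory: sqrt(100) = 10
print(f"small pixel SNR ~ {small:.1f}, large pixel SNR ~ {large:.1f}")
```

The larger pixel's SNR comes out at roughly twice the smaller one's, close to the theoretical 10 versus 5, even though both pixels see exactly the same light intensity.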

Sensor Size

|

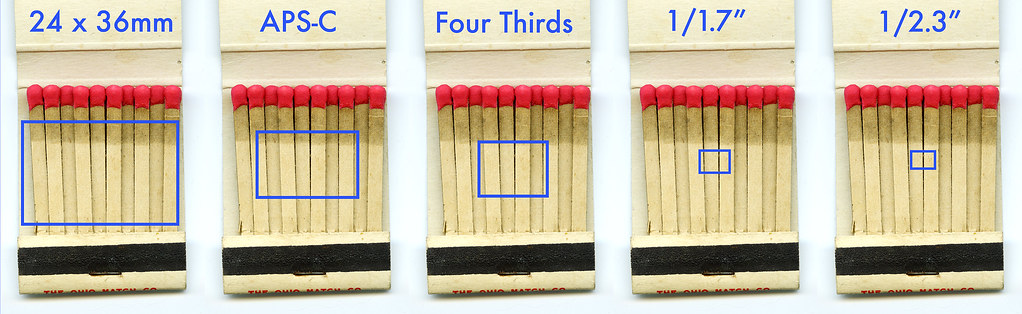

| Blue boxes compare the relative sizes of common sensor formats image by Voxphoto (Image rights) |

The largest possible sensor may be desirable from an image-quality point of view, but increasing a chip's area causes its production costs to mushroom. It also means that lenses, camera bodies, etc., must increase proportionally in bulk and weight. Camera makers therefore choose an appropriate trade-off between quality and portability for each intended use and market segment, and sensor chips are manufactured across a great range of sizes. The sensor in a typical smartphone camera may be a tiny 3 x 4mm, while a medium-format digital back may use a 33 x 44mm chip, about 120 times larger in area, and cost as much as a car.

But the great majority of digital stills cameras sold today use one of the following sensor sizes. Based on actual imaging area, these are:

- 4.5 x 6mm (so-called 1/2.3"): typical compact point-and-shoot cameras

- 5.6 x 7.4mm (so-called 1/1.7"): top-grade compact cameras

- 13 x 17.3mm: Four Thirds and Micro Four Thirds cameras

- 15 x 23mm (so-called APS-C format) or 16 x 24mm (Nikon's DX sensor): entry-level to professional DSLR and mirrorless cameras

- 24 x 36mm (so-called "full frame"): top-specification DSLRs
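Sensor sizes are often compared via the "crop factor": the ratio of the full-frame diagonal to a given sensor's diagonal. Multiplying a lens's focal length by it gives the full-frame focal length with the same field of view. The sketch below computes crop factors and relative areas from the nominal dimensions listed for a few of these formats. The helper names are hypothetical, and because the listed sizes are rounded, the results are approximate.

```python
import math

# The 24 x 36mm "full frame" format is the usual reference
FULL_FRAME_DIAG = math.hypot(24, 36)  # about 43.3mm
FULL_FRAME_AREA = 24 * 36             # 864 square mm


def crop_factor(height_mm, width_mm):
    """Ratio of the full-frame diagonal to this sensor's diagonal."""
    return FULL_FRAME_DIAG / math.hypot(height_mm, width_mm)


def area_fraction(height_mm, width_mm):
    """Fraction of the full-frame imaging area that this sensor covers."""
    return height_mm * width_mm / FULL_FRAME_AREA


formats = {
    'compact (1/2.3")': (4.5, 6),
    "APS-C": (15, 23),
    "Nikon DX": (16, 24),
    "full frame": (24, 36),
}
for name, (h, w) in formats.items():
    print(f"{name:18} crop factor {crop_factor(h, w):4.1f}, "
          f"{area_fraction(h, w):6.1%} of full-frame area")
```

For example, the 16 x 24mm DX sensor has a crop factor of exactly 1.5 and covers about 44% of the full-frame area, while a 1/2.3" compact sensor covers only about 3%.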

Notes

- [1] "Color and Light", discussing filter arrays, at Kodak's Plugged in blog (archived).

Links

- Image Sensors World, a blog of sensor industry news

- "Understanding Digital Camera Sensors" with a discussion of Bayer filter arrays and demosaicing, at Cambridgeincolor.com